Over thirty years ago, during a clinical anesthesia seminar for CRNAs, we heard an extraordinary account. A CRNA from Duke University (name withheld for privacy) described borrowing an ultrasound (US) machine from the obstetrics unit to assist in performing an axillary nerve block. At the time, such an approach was virtually unheard of. The literature contained only a few basic reports, primarily focused on Doppler imaging. Could this have been one of the first—perhaps the first—presentations at a professional conference describing the use of ultrasound for a peripheral nerve block?

While the answer to that question remains elusive, it is clear that in those early years of using US to perform blocks, few people, if any, envisioned what the future held. Instead of looking back three decades or more, let’s speculate on what things might be like three decades hence. In doing so, we acknowledge that predictions are fraught with hazard, recalling the famous quote by Yogi Berra, who said, “It’s tough to make predictions, especially about the future.” We’ll strive to remain grounded in what we’ve observed in the ongoing evolution of scientific and technological domains.

What is ultrasound?

Consider the ingenuity of those who first proposed using sound waves—similar to how bats navigate and locate prey in darkness—to noninvasively identify structures beneath the skin. The earliest medical applications emerged in obstetrics in the late 1950s, where ultrasound was used to assess fetal viability and anatomy. While the human ear detects sound between 20 and 20,000 cycles per second, clinical and diagnostic ultrasound operates between 2 and 15 million cycles per second—well above our hearing range—hence the term “ultrasound.”

US technology has advanced enormously, and we now see it being used not only for nerve blocks and line placements, but perioperatively for cardiac, vascular, airway, pulmonary, gastrointestinal, and many other assessments. Just look at what has evolved in fetal US imaging, where the traditional 2-dimensional rendering in white, gray, and black can now, with carefully designed algorithms, provide a color, 3-dimensional image that resembles a glossy postcard you might purchase. Consider the many CRNAs, skillful in TEE applications, who can assess myocardial function in real time during surgical anesthesia! And what of the many CRNAs who use US to determine the presence of gastric contents and for airway assessment?

Parallel advancements in robotics and artificial intelligence: Ménage à trois?

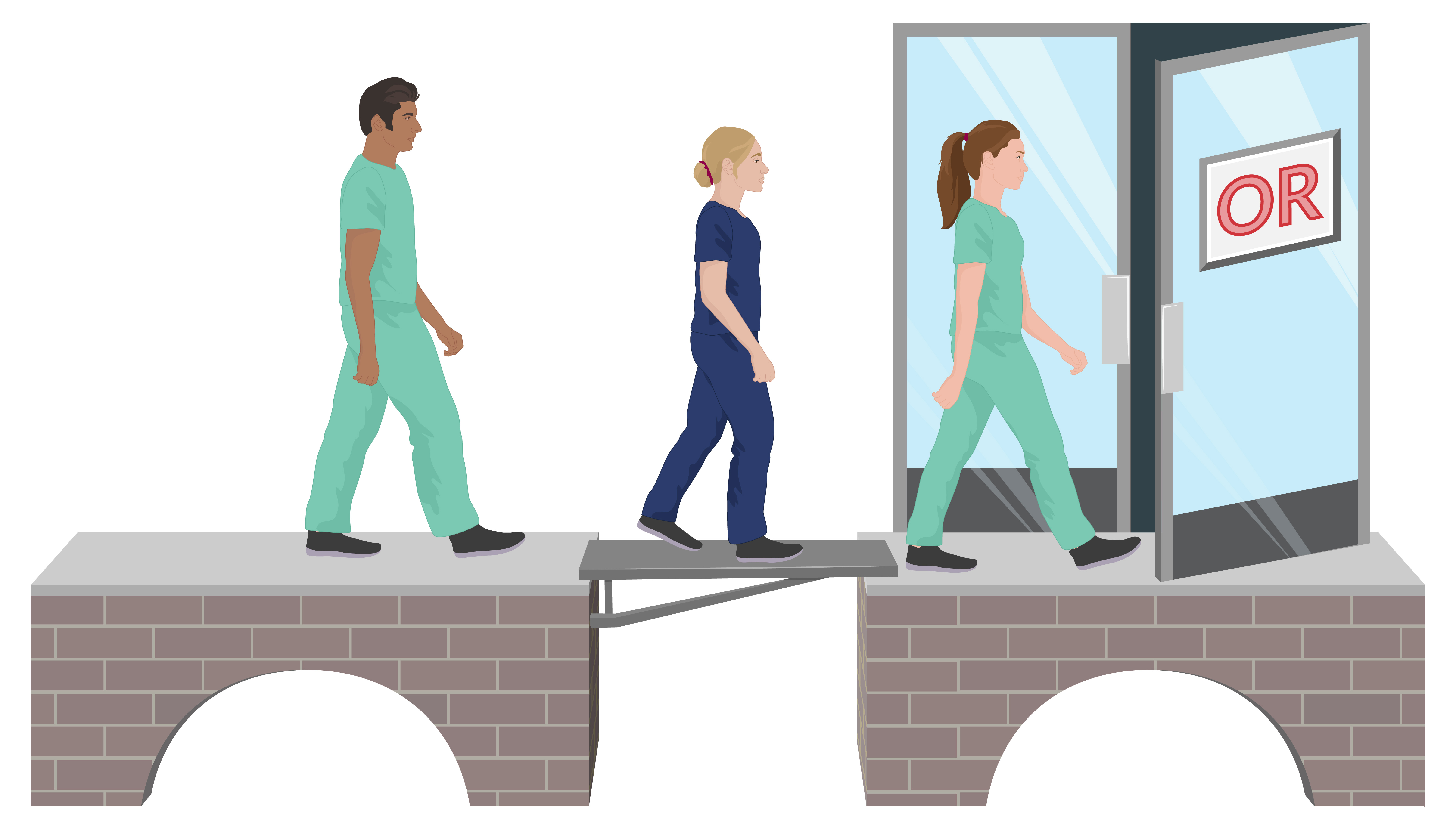

Think of the advancements in robotics: joystick control of a robot has been used to perform nerve and facet blocks, eventually in live subjects, and with the Kepler Intubation System, a robot safely and effectively intubates live patients on the first attempt. Robotic systems have grown in complexity and capability and are now integrated into a vast number of procedures amenable to their application. Surgical robots continue gaining traction, though, as discussed in a previous blog post, their outcome benefits compared with traditional approaches remain uncertain.

Artificial intelligence (AI) continues to advance rapidly. In healthcare alone, its ability to access vast data sources nearly instantaneously, when wedded to certain algorithms, allows it to predict, detect, modify, and urge select patient interventions. Machine learning and deep learning support patient-centered planning, precise interpretation of imaging, and recommendations on best practices. Many of our readers are likely familiar with, and may already use, the Hypotension Prediction Index (HPI) software from Edwards Lifesciences. This platform combines artificial intelligence with advanced algorithms to analyze real-time hemodynamic data and generate predictive indicators of impending hypotension.

Consider also the Accuro Neuraxial Guidance system, a portable, AI-enabled device powered by the proprietary SpineNav3D™ algorithm and designed specifically for neuraxial anesthesia. It identifies key anatomical landmarks, provides real-time mapping, and has been shown to significantly shorten the learning curve for accurate block needle placement.

Advances in robotics and US are occurring at an astonishing rate, and there appears to be little to slow their evolution. While federal funding for research and development may be declining across the healthcare spectrum, the enormous commercialization potential associated with the marriage of US, AI, and robotics represents a ménage à trois likely to fuel work in the domain. The sky may be the only limit.

What might the future look like?

While a marriage between two individuals has an uncertain course from the outset, the long-term stability and strength of the fusion of US, AI, and robotics is unlikely to fracture. Consider the remarkable advancements in MRI and CT technology over the last decade, and the resultant clarity and precision in capturing invaluable images noninvasively. US, with its two technological partners at its side, is likely to match, or even exceed, what we’ve witnessed with MRI and CT, and importantly, at a fraction of the cost.

While MRI and CT units remain large, stationary systems, ultrasound offers unmatched portability. With continued miniaturization of robotic components and seamless integration of wireless analytics, the potential for expanding ultrasound anesthesia applications is virtually limitless. These advancements also promise to improve the education and training of novice CRNAs by enabling highly realistic simulation experiences. Soon, precise, real-time, 3D images of subsurface anatomy will be available directly at the point of care, at the patient’s bedside, for immediate interpretation and intervention.

US on a needle point, embedded into a glove, and virtual renditions

Let’s allow our imaginations to run a bit wild; maybe a few metaphors will help to stir your thoughts. Consider:

- A wild horse: powerful, unending energy, with an unbridled exploratory landscape.

- The ocean: spectacular species diversity and enormous depth and breadth.

- The universe: unparalleled expansiveness that has no obvious boundaries.

So here we go with three ideas, catalyzed by just pondering the possibilities. In each case, we imagined the ménage à trois discussed earlier. We don’t think you’ll find these in the current literature, but maybe you will sometime in the next 30 years, or sooner!

US on a needle point

You are performing a nerve block, and the needle shaft, using nanotechnology, is enabled with US technology. It not only senses the tissue and relevant anatomical features it passes through but also continuously voices the precise location of the needle’s tip to you and wirelessly sends real-time tissue images to a screen for guidance.

US embedded into a glove that you wear

As part of your multimodal anesthetic, you plan to perform femoral and popliteal sciatic nerve blocks for your patient having a total knee replacement to ensure excellent postoperative pain control. After you’ve prepped the planned injection sites, you don a sterile left glove with US embedded in each finger. As you place the gloved hand over the approximate locations of the nerves, US imaging appears on a screen, revealing incredibly detailed internal anatomy. Your US-enabled fingers provide a 3-dimensional portrait of the target nerves and vasculature, allowing you to inject a long-acting local anesthetic safely and with unrivaled precision.

Virtual rendering

Your older, class-3 patient with debilitating COPD had a serious fall and is in great pain from a fractured clavicle that, a radiograph reveals, has the potential to injure the lung. You are called to the ED to provide analgesia and plan to do an interscalene nerve block. Using robotic instrumentation and US that noninvasively overlays the site, you create a hologram of the involved bone, tissues, and nearby lung. It appears before you, hovering over the affected area as if you had summoned the injured region out of the patient’s body. With the patient in a semi-sitting position, you manipulate the hologram, a precise duplication of the underlying anatomy, turning it left, right, up, or down as you search for the best needle path. Once the path is determined, AI guidance activates the robotic system, which delivers 10 mL of 0.75% ropivacaine. Analgesia is achieved rapidly, with no compromise to patient safety.

Final comment

The future of US in the coming decades looks bright, and for CRNAs, that means we will witness developments that bring increasingly novel imaging capabilities to the patient’s bedside. US, enhanced with robotics and AI and further aided by nanotechnologies, will greatly assist CRNAs in optimizing the care they provide.

And what about those three futuristic applications we came up with? Would you like to brainstorm along with us? What do you envision? We’d love to hear your ideas!
